This article was written on 31/05/2023 and reviewed on 04/03/2024. Because OpenAI's Chat-GPT evolves rapidly, some information here may be incomplete. The article will be reviewed regularly to keep it up to date.

As coding and programming can now be carried out using LLM (Large Language Model) tools and generative AI, many professions are under threat in the medium term. Web development is one of them. How can we resist the temptation to replace a team of devs when just one person will be able to code with ChatGPT?

Artificial intelligence has been making considerable progress over the last few decades, to the point where many professions could disappear in the near future. The arrival of Low Code and NoCode had already caused an initial earthquake in the world of web development. Generative AI now looks like a veritable tsunami.

What will become of development teams when a single person with a perfect grasp of generative AI is all it takes to code websites and applications?

I] Chat GPT, the Generative AI tool that could make the developer’s job disappear

Generative AI is progressing so fast that the professional landscape may no longer be the one we know today. With Chat GPT, you can debug code, detect security flaws, code, develop in several programming languages, simulate a virtual machine, build web pages, develop applications…

So where does a web developer fit in when a freely accessible tool can do all of this for them?

1. Chat GPT lets you generate code without any real knowledge of web development

It's a conversational tool that can generate code very quickly in most mainstream programming languages. It's well suited to creating relatively simple building blocks of code, as long as you give it a well-defined prompt. Because it's a chatbot, almost anyone can understand how to use it.

2. Chat GPT can be used to create a website or a mobile application

With the right prompt, you can push Chat GPT even further.

Examples abound online, and it's no secret that Chat GPT can write Java, HTML/CSS and Python. Its models can also be called programmatically through the OpenAI API with an OpenAI API key.
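Here is what "using an OpenAI API key" can look like in practice. This is a minimal Python sketch that uses only the standard library to call OpenAI's Chat Completions endpoint. The model name `gpt-4`, the system prompt and the `OPENAI_API_KEY` environment variable are assumptions to adapt to your own account. `build_request` and `ask_for_code` are illustrative names and are not part of any official SDK.

```python
import json
import os
import urllib.request

# OpenAI REST endpoint for chat-style completions.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4") -> urllib.request.Request:
    """Build (but do not send) a POST request asking the model for code."""
    payload = {
        "model": model,  # assumption: any chat model your account can access
        "messages": [
            {"role": "system", "content": "You are a senior web developer."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key authenticates the call
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_for_code(prompt: str) -> str:
    """Send the request and return the text of the first answer."""
    req = build_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask_for_code("Write an HTML page with a centered heading")` sends the request and returns the generated code as plain text.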

However, the first draft is rarely perfectly finished.

Nevertheless, it’s perfectly possible to rework it without being a web developer.

You can even ask it to revise the code when something goes wrong.

You can also ask it to rephrase its answers as many times as necessary.

All in all, you’ll have the luxury of choosing between several versions of generated code, without having to have any specific knowledge of coding or programming.

For web and mobile application development, you can also plug Chat GPT into other APIs to build more sophisticated applications. For example, the WebChatGPT browser extension lets you get answers that take current search results into account, and therefore current coding techniques. Previously, answers were limited to training data that stopped in 2021.

These improvements are regularly made by OpenAI, and are crucial if this tool is to remain the benchmark for LLM (Large Language Model) and generative AI.

OpenAI has also developed a prompt-based image generation application called DALL-E 3, an alternative to another well-known image-generation AI, Midjourney.

By combining the two now-compatible AIs (Chat-GPT and DALL-E 3), you can create code, text and images for your projects, without any specific knowledge of copywriting, coding or graphics.

3. Developers' knowledge is limited; Chat GPT's is far broader

Although developers have a solid knowledge of coding and programming, it is not comparable to an LLM-type language model.

This technology is trained on vast datasets of text.

OpenAI continues to refine its responses with large volumes of new data and with feedback from users.

This represents billions of words and word combinations.

As a result, Chat GPT has learned statistical models of language. These let it answer the questions it is asked as naturally and accurately as possible.

That's one of the reasons why Chat GPT can answer coding and programming questions in many, if not all, programming languages, whereas a web developer rarely masters every language they might need in their work.

4. Chat GPT has computing power and execution speed that humans can’t match.

Its ability to retrieve information, rephrase it and present it back is unrivalled by any human.

Beyond answering queries in a human-like, structured way, Chat GPT can address almost any topic, which is a superhuman ability.

It takes no more than a minute to give a more or less precise answer to any question, on any subject. Its knowledge base isn't quite "infinite", but its range of possibilities already seems to be, especially with GPT-4, which far outclasses GPT-3.

For reference, GPT-3, the model family from which the first version of Chat GPT descended, has 175 billion parameters.

Behind it all is Microsoft, which made an initial $1 billion investment in OpenAI in 2019. The main goal was to build a world-class supercomputer from scratch to provide the computing power needed to train OpenAI's models.

The American giant's Azure platform also gave OpenAI a solid base from which to develop and launch GPT-4.

In 2023, Bloomberg reported that Microsoft would soon invest $10 billion in OpenAI to accelerate its AI work.

II] Chat GPT is on the cusp of a technological revolution, but…

LLMs like Chat GPT are on the rise, but are faced with a number of technical, legal and ethical issues. Here are a few pointers to help you understand the main areas for improvement:

1. A framework is needed to ensure that data is processed responsibly.

LLMs can indeed have implications for the confidentiality and security of sensitive information such as medical, industrial and government secrets. Since these models have the ability to generate texts that can be very convincing, they could potentially overstep confidentiality and security limits.

Such a framework could limit access to these models and enforce rules and regulations for their use, particularly by government organizations and businesses. Researchers and LLM developers also have a responsibility to consider the ethical implications of their work and to ensure that these models are used responsibly.

2. Chat-GPT uses the Internet as a whole, with all its shortcomings, to provide the most “probable” answers.

LLMs like Chat GPT can reproduce existing biases in the data they are trained on, potentially causing harm to marginalized or discriminated groups in society.

It turned out, for example, that Chat GPT was drawing on Twitter data, including replies to tweets from any Internet user. It was therefore absorbing all the excesses found on social networks, as Elon Musk revealed in a tweet.

Elon Musk was later joined by hundreds of experts from around the world, including Apple co-founder Steve Wozniak, in signing an open letter from the Future of Life Institute. The letter called for a six-month pause in research into artificial intelligences more powerful than GPT-4, OpenAI's model, to prevent “major risks for humanity”. Perhaps this was a way for him to buy time to develop his own LLM project, initially named TruthGPT, which became xAI and its chatbot Grok?

Elon Musk, a former member of OpenAI, now seems to be in complete disagreement with the direction taken by ChatGPT's parent company: he recently filed a lawsuit against Sam Altman.

The complaint argues that the company he helped found was meant to remain a non-profit research organization. Its purpose was to advance science through AI for the benefit of all Internet users, not to enrich itself by drawing ever closer to Microsoft…

Growing concerns about AI and LLMs have produced some notable early reactions, such as the resignation from Google of Geoffrey Hinton, a pioneer of artificial intelligence research.

He told The New York Times that he is concerned about the way AI is shaping the world today and tomorrow.

In the same interview, published on May 1, 2023, he said that “future versions of this innovation could be a risk to humanity”. He also admitted “regretting his contribution to this innovative field”.

The next day, in an interview with the BBC, he said that “chatbots are scary”. He added: “At the moment, they’re not smarter than we are, as far as I know. But I think they will be soon.”

These LLMs are now sparking debates on the ethical and social implications of automating the production of text, and therefore of code, and more broadly on how we should use this technology. Since an AI is not bound by professional secrecy, the question of ethical AI is a very serious one.

3. Chat GPT in the face of industrial, medical and government secrets

LLMs can have implications for the confidentiality and security of sensitive information such as medical, industrial and government secrets. These models can generate very convincing texts and could potentially exceed the confidentiality and security limits of any organization…

An LLM could be used to generate false medical reports or to access confidential industrial information.

Samsung, for example, paid the price. Its employees used the Chat-GPT platform to fix the source code of one of its applications and revealed confidential information in the process, as reported by the Korean outlet Economist Korea.

Because conversations submitted to Chat-GPT could be used to train future versions of the model, this information could in principle resurface for anyone who came looking for it.

Samsung has therefore blocked the use of Chat-GPT in its workplaces, for fear of leaking confidential information.

Other large companies, such as Verizon and JP Morgan, have taken similar precautions.

In the government sphere, an LLM could be used to bypass security protocols and gain access to classified information, or to spread disinformation via social media.

To address these risks, it’s important to have strict security protocols in place to limit access to these models and the information they contain. Governmental organizations and businesses may also need to strengthen their cybersecurity to protect themselves against potential LLM-related threats.

When it comes to professional secrecy, companies' weak points have the same origin as in cybersecurity: human intervention. According to a study conducted by Cyberhaven, 11% of the data employees enter into Chat-GPT is confidential.

A mid-sized company could therefore disclose confidential information to Chat-GPT hundreds of times a week. The problem is that this material may be used to train the model and could then become accessible to the general public.
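One common way to reduce this kind of leak is to filter prompts before they leave the company. The Python sketch below is a deliberately simple, hypothetical example. Its three regular expressions are illustrative assumptions: email addresses, strings shaped like OpenAI-style `sk-` secret keys, and IPv4 addresses. A real deployment would rely on a dedicated data-loss-prevention tool with rules tailored to the organization.

```python
import re

# Illustrative patterns only (hypothetical); real data-loss-prevention
# rules would be broader and tailored to the organization.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),  # OpenAI-style secret keys
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace sensitive-looking substrings with placeholders such as [EMAIL].

    Returns the redacted text and the number of matches found per pattern.
    """
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


def is_safe_to_send(text: str) -> bool:
    """True when no pattern matched, i.e. the prompt can be forwarded as-is."""
    _, counts = redact(text)
    return not any(counts.values())
```

For example, a company gateway could forward only the redacted text to Chat-GPT. It could also block any prompt for which `is_safe_to_send` returns `False`.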

4. Backdoor uses of Chat GPT enable hackers to develop malware

As revolutionary as this technology is, generative AI is still in its infancy.

Yet it didn’t take hackers long to hijack Chat-GPT to create malware.

In the Chat-GPT 3 version, the ethical code defined by OpenAI did not properly counter hackers' social engineering techniques.

When Chat-GPT was asked directly to hack, or to describe hacking techniques, the AI replied that it could not be used for such purposes.

However, after many questions and requests, Chat-GPT 3 would eventually provide code snippets that cyber hackers could then use to create Trojans, or code snippets for phishing.

5. What’s the difference between human intelligence and artificial intelligence?

“We are biological systems and these are digital systems,” said Geoffrey Hinton about AI and chatbots.

Despite the immensity of the data taken into account by Chat-GPT, artificial intelligence still has many shortcomings compared to human intelligence.

Indeed, it reproduces certain biases present in the data it retrieves from the web and in the data it assimilates.

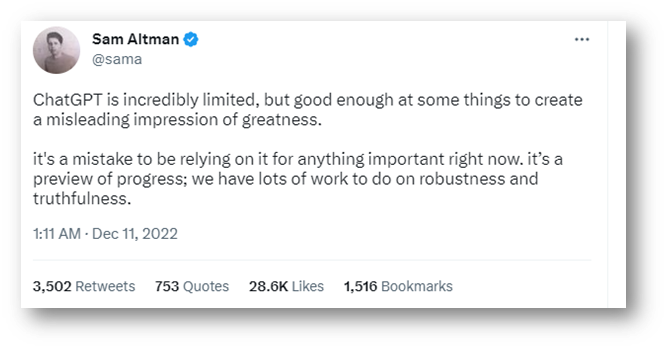

Sam Altman, co-founder and CEO of OpenAI, acknowledged in a tweet published in December 2022 that Chat-GPT was limited. In doing so, he took a dim view of the accuracy of the information delivered by the chatbot.

These limits show up in mathematical exercises and even on medical subjects. The machine could make serious errors if it were to make decisions in place of a human.

The main difference lies in emotional intelligence.

It lacks critical thinking, sensitivity and the ability to prioritize information.

Suppose you write about your area of expertise, and a beginner writes about the same subject right afterwards. Chat-GPT will average the two texts to come up with a version that everyone can agree on. The result won't be up to scratch.

Chat-GPT’s latest updates on mathematics seem to show that the subject is being taken seriously, as fixes have been made to ensure that the AI makes fewer mistakes.

The same applies to its understanding of Python (see the release notes of February 13, 2024).

There are therefore many parameters for which Chat-GPT cannot replace a “real” professional, such as a web developer.

A neophyte could indeed produce code using Chat-GPT, but without knowing what he’s producing, he wouldn’t be able to tailor it to a company’s precise needs.

Although Chat-GPT4 is getting stronger every day, thanks to the knowledge accumulated by Internet users and its human training, there are still inaccuracies that don’t “yet” put professionals offside.

III] Pair programming: Chat-GPT is a tool for developers

While Chat-GPT doesn't steal the show from development and programming experts, it is an excellent asset in their work. It can automate the most repetitive tasks, review code, and generate code that can be tweaked to meet simple development needs, all in several programming languages.

This is known as pair programming, where a human-machine pair works together on a task or set of tasks.

1. Chat GPT can generate code, but its quality depends on the type of programming language, and the complexity of the task.

Chat-GPT is an AI-based language model trained on a large amount of textual data, including source code. This means it can generate code from input text, but the quality of that code can vary depending on the complexity of the task and the quality of the training data.

However, using Chat-GPT to generate code is intended for advanced users with a thorough knowledge of programming. It is important to understand that the generated code may require adjustments and modifications to ensure that it is efficient and functions correctly. It is therefore advisable to use Chat-GPT to generate code only as a starting point for programming projects, and not as a final solution.
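Treating generated code as a starting point means checking it against your own requirements. As a hypothetical illustration in Python, suppose Chat-GPT returned `slugify_generated` for the prompt "turn an article title into a URL slug". A few acceptance cases written by the developer reveal that it mangles accented characters, which matters for a French-language site. `slugify_reviewed` is the adjusted version. The function names and test cases are invented for this example.

```python
import re
import unicodedata


# Hypothetical first draft, as an AI assistant might return it.
def slugify_generated(title: str) -> str:
    slug = title.lower().strip()
    slug = re.sub(r"[^a-z0-9]+", "-", slug)  # every other run of characters becomes "-"
    return slug.strip("-")


# Version adjusted by the developer after review.
def slugify_reviewed(title: str) -> str:
    # Decompose accented letters ("è" -> "e" + accent mark), then drop the marks.
    ascii_title = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")


# Acceptance cases written from the real requirements, not by the AI.
CASES = {
    "Hello, World!": "hello-world",
    "  Chat-GPT & Devs  ": "chat-gpt-devs",
    "Crème brûlée": "creme-brulee",
}


def failing_cases(func) -> list:
    """Return the titles for which func does not produce the expected slug."""
    return [title for title, expected in CASES.items() if func(title) != expected]
```

Here `failing_cases(slugify_generated)` returns `['Crème brûlée']`, while `failing_cases(slugify_reviewed)` returns an empty list. The developer's own cases catch what the first draft missed.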

2. How can Chat-GPT be used to solve cybersecurity problems?

While Chat GPT can help hackers create malware or carry out social engineering, it can also serve honest causes, such as cybersecurity.

Because Chat-GPT compiles information on an ongoing basis, it has absorbed a history of web-based cyberthreats, just as hackers have. It can learn from this historical data to identify suspicious behavior and threats on the web. It can also provide partial or complete answers to digital security questions from Internet users or employees.

Once properly set up, it can detect spam and identify malicious links in your e-mail or messaging system.

It is therefore possible to use Chat-GPT as a starting point for identifying known vulnerabilities, in order to propose security measures to protect against them. Based on the cyberthreats studied and countered in the past, Chat-GPT is therefore able to provide known avenues of protection against certain dangers in your network, or more broadly, in your organization.

3. Chat-GPT is not (yet) adapted to specific environments

To date, the most technical and specific environments cannot be mastered by Chat-GPT. While Chat-GPT can build on what already exists, it cannot create new development techniques, new programming languages, or create expertise from scratch in place of human beings. By relying solely on what already exists, Chat-GPT also acts as a brake on hackers, who won’t be able to use Chat-GPT’s technology to create malware that doesn’t yet exist on the web.

4. Chat-GPT and its ethical limits

While it's possible to ask Chat-GPT to imitate the writing style of a company or a human being, Chat-GPT can't substitute itself for a legal or natural person, whether for lack of sensitivity or for lack of personalization. When we say "code", this time we mean code of conduct, code of ethics and code of behavior. A developer will therefore have to take code generated via Chat-GPT and adapt it to a company's codes: its style, jargon, expertise and critical mind, but also its regulatory and legal environment.

This is why some generative AI services, such as Google's Bard and Claude 2, have long been unavailable in France and several other countries. They did not take into account, case by case, the specific regulatory constraints of each country, which is needed to provide a service that fully complies with national legislation.

For a 100% French generative AI with performance on a par with the best American models, you can turn to "Le Chat", from Mistral AI.

5. Reinforcement Learning, or AI trained by humans

GPT stands for "Generative Pre-trained Transformer"; the "Chat" prefix refers to its conversational use.

This tool is trained using supervised learning and reinforcement learning techniques, two approaches that use human-created data to train the model, with the aim of providing the best possible answers to the queries it is given. By taking into account billions of pieces of information taken from the web, as well as from books of all kinds, Chat GPT also uses users' own queries to learn and provide ever more appropriate answers. In this way, users help to shape Chat GPT on an ongoing basis.

Conclusion

Chat-GPT is a great tool that will help developers save time by automating the most repetitive tasks and acting as an assistant for both code generation and code verification. Nevertheless, Chat-GPT cannot yet tailor the code it generates the way an "in-house" developer can. Human intervention is required to ensure that your code is not just generic code, but that of your organization.

Even with well-crafted framing prompts asking it to put itself in the shoes of a typical organization, Chat-GPT has technical limitations.

As a result, it is not capable of coding specifically for projects that are unique and customized for your organization or your customer. As Chat-GPT relies on what already exists, it has no predictive capability, nor the ability to create code that has never been developed before.

This is why hackers have limited power to create malware with this tool.

Developers are therefore well advised to use Chat-GPT as an assistant, drawing on its computing and analysis power.

However, Chat-GPT in its current form is clearly not in a position to replace them.

As long as Chat-GPT remains solely a conversational tool, a developer will have to interact with it, and play the role of controlling what is produced by the AI.

Developers are still indispensable to the creation of unique, high-quality software, but they can make full use of generative AI such as Chat-GPT or GitHub Copilot Chat to help them in their day-to-day work.