Million-dollar problems – Spoiler: it’s not even a problem.

“Fairy tales for adults: from the boy who cried wolf to alert fatigue”.

Once upon a time, a young shepherd, tired of his monotonous life in the pastures, decided to amuse himself by pretending that a wolf was attacking his flock. He ran to the village shouting: “Wolf! Wolf!” The villagers rushed to his aid, only to discover that it was a false alarm and that the boy was mocking them. A few days later, he repeated the joke, with the same result.

But one evening, a real wolf attacked the sheep. The boy ran to the village, crying out desperately for help, but the villagers, thinking it was another joke, ignored him. Unfortunately, the wolf killed many of the boy’s sheep, and there was no one to help him.

This story, found in many cultures, teaches the consequences of lying, but it also illustrates false positives and the alert fatigue they cause. Unlike the boy's pranks, false positives in security are not deliberate: they result from a lack of detailed information or of sufficient confirmation.

The dangers of false positives in modern industries

From finance to IT security, almost every sector is confronted with false positives. These erroneous alerts cause financial and reputational damage to companies, which lose tens of thousands of working hours every year.

Many people think that false positives are difficult to tackle. They are, if you don't properly understand the targeted or underlying systems, the anomalies, or the malicious attempts involved. False positives not only degrade the quality of vulnerability and incident reporting, but also disrupt legitimate operations, real customer interactions and valid transactions.

Origins and implications of false positives

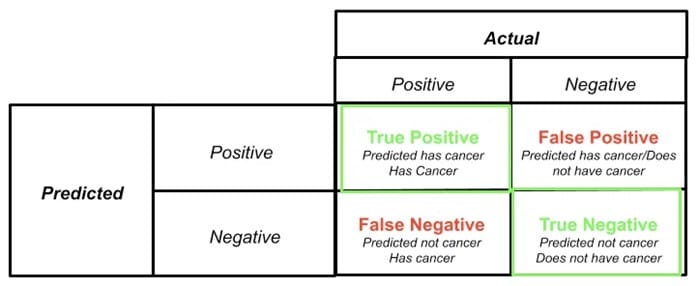

The terms "false positives", "true positives", "false negatives" and "true negatives" derive from statistical hypothesis testing and binary classification, commonly used in fields such as medicine, statistics and security operations. In machine learning, these terms are used to assess the accuracy of tests and decisions. We will focus here on their importance in information security and incident response.

Understanding key terms

- True positive (TP): a correct positive result; the test reports a condition that is actually present.

When your analysis tool reports an SQL injection vulnerability and you or the tool itself can confirm that the result is real and that the vulnerability exists, this result can be classified as a true positive.

In short, you or the security tool or service you've used reports something, and that vulnerability is indeed present. You may be wondering what "can confirm" means in practice. This is where the problem arises: confirming that a vulnerability exists takes a lot of human effort, or a lot of technical capability on the part of the tool.

A simple rule of thumb applies here: if a vulnerability exists, it must be exploitable. If it isn't exploitable, it's probably not a vulnerability.
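The rule of thumb can be turned into an automated confirmation step. The sketch below assumes a boolean-based differential test, which is only one common way to confirm SQL injection: it sends two inputs that differ solely in an always-true versus always-false condition and checks whether the responses differ. The in-memory SQLite database and the `search_vulnerable`, `search_safe` and `confirm_boolean_sqli` functions are hypothetical stand-ins for a real application, not part of any tool.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Hypothetical in-memory backend standing in for the application's database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", [(1, "apple"), (2, "pear")])
    return conn


def search_vulnerable(conn: sqlite3.Connection, term: str) -> list:
    """Hypothetical handler that builds SQL by string concatenation (injectable)."""
    query = "SELECT name FROM products WHERE name = '" + term + "'"
    return conn.execute(query).fetchall()


def search_safe(conn: sqlite3.Connection, term: str) -> list:
    """Hypothetical handler that uses a parameterized query (not injectable)."""
    return conn.execute("SELECT name FROM products WHERE name = ?", (term,)).fetchall()


def confirm_boolean_sqli(handler, conn: sqlite3.Connection, base: str = "apple") -> bool:
    """Return True only if the reported injection is demonstrably exploitable.

    If the backend interprets the input as SQL, the always-true and
    always-false payloads yield different results; if the input is
    treated as plain data, both return the same (empty) result.
    """
    true_resp = handler(conn, base + "' AND '1'='1")
    false_resp = handler(conn, base + "' AND '1'='2")
    return true_resp != false_resp


conn = make_db()
vulnerable_confirmed = confirm_boolean_sqli(search_vulnerable, conn)
safe_confirmed = confirm_boolean_sqli(search_safe, conn)
```

A scanner finding against `search_vulnerable` would be confirmed as a true positive, while the same finding against `search_safe` fails confirmation and can be downgraded as a probable false positive. A differential test that shows no difference does not prove absence, though; other injection styles (time-based, error-based) may still apply.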

Does this remind you of Aristotle? You're quite right! These are very basic rules of logical reasoning. Even so, humans sometimes get confused.

If the logic is so basic, why do so many tools and services fail at it? The answer is simple: a human has to spend time reaching this conclusion (which is precisely one of the problems), and a tool or service has to understand the inner workings of the target systems very well.

- True negative (TN): a correct negative result; the test reports that a condition is absent, and it really is absent.

It indicates that the test correctly identified the absence of a relationship or condition that is indeed not present in the data.

For example, if a security check performed by you or by a tool or service finds no SQL injection vulnerability on the website, and the website really doesn't have this problem, the finding can be considered a true negative. You have given the system a negative rating for the existence of a vulnerability, and that rating is accurate.

- False positive (FP): a false positive occurs when a test falsely indicates the presence of a condition that is not actually present; in other words, the test wrongly gives a positive result.

Let’s recall our first example: you or the tool/service you used reported an SQL injection vulnerability on your website.

In reality, this is not true.

To make the example even more clear-cut, suppose your website has no database connection at all, or the database behind the application isn't a relational database. You can then easily report the finding as a false positive: the result is simply wrong.

- False negative (FN): a false negative is a test result that falsely indicates the absence of a condition that actually exists.

While false positives can be time-consuming, tiring and annoying, false negatives can be very risky and devastating.

Let’s say you ask your security team to scan your new web service for SQL injection vulnerabilities.

After a while, the staff member comes back and says he has done his best but found no SQL injection vulnerabilities in your web service. If a vulnerability really does exist, however, reporting the finding as "not found" (negative) is misleading: this situation is a false negative. Again, while false positives cost a lot of resources, time and patience, false negatives, which mark a system as healthy when a vulnerability exists, can be very dangerous.
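The four outcomes above form a confusion matrix. The sketch below classifies each check by two booleans (did the tool report the vulnerability, and is it actually present) and derives common summary rates. The function names and the sample results are hypothetical, chosen only to illustrate the bookkeeping.

```python
from collections import Counter


def classify(reported: bool, actually_present: bool) -> str:
    """Map one check to TP, FP, FN or TN."""
    if reported and actually_present:
        return "TP"  # reported, and the vulnerability exists
    if reported and not actually_present:
        return "FP"  # reported, but nothing is there
    if not reported and actually_present:
        return "FN"  # missed a real vulnerability
    return "TN"      # correctly reported as clean


def summarize(results):
    """Count outcomes and compute rates; a zero denominator yields 0.0."""
    counts = Counter(classify(reported, present) for reported, present in results)
    tp, fp, fn, tn = (counts[k] for k in ("TP", "FP", "FN", "TN"))
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of reports that were real
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real issues that were reported
    fpr = fp / (fp + tn) if fp + tn else 0.0        # share of clean checks wrongly flagged
    return counts, precision, recall, fpr


# Hypothetical scan results as (reported, actually_present) pairs.
sample = [(True, True)] * 3 + [(True, False)] * 5 + [(False, True)] * 1 + [(False, False)] * 11
counts, precision, recall, fpr = summarize(sample)
```

With these made-up numbers, five of the eight reported findings are false positives (precision 0.375), while one real vulnerability was missed (recall 0.75). Precision is the rate that drives analyst workload, whereas recall measures exposure to false negatives.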

The real damage caused by false positives

False positives can be extremely costly for organizations. False-positive investigations can require almost 10,000 man-hours a year, at a cost of around $500,000.
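Those two figures imply roughly $50 per investigation hour ($500,000 / 10,000 hours). The sketch below turns that into a simple cost model; the alert volume, false-positive share and minutes per alert are hypothetical inputs, not figures from the sources above.

```python
def implied_hourly_rate(annual_cost: float, annual_hours: float) -> float:
    """Cost per investigation hour implied by an annual total."""
    return annual_cost / annual_hours


def annual_fp_cost(alerts_per_day: float, fp_share: float,
                   minutes_per_alert: float, hourly_rate: float,
                   days: int = 365) -> tuple:
    """Estimate yearly hours and money spent investigating false positives."""
    fp_alerts = alerts_per_day * fp_share * days  # false alerts per year
    hours = fp_alerts * minutes_per_alert / 60    # analyst time, in hours
    return hours, hours * hourly_rate


rate = implied_hourly_rate(500_000, 10_000)  # 50.0, from the figures above
# Hypothetical SOC: 200 alerts a day, half of them false, 15 minutes each.
hours, cost = annual_fp_cost(alerts_per_day=200, fp_share=0.5,
                             minutes_per_alert=15, hourly_rate=rate)
```

Under these assumptions the team spends 9,125 hours and $456,250 a year on false alerts, the same order of magnitude as the figures quoted above. Halving either the false-positive share or the triage time halves the cost.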

The impact of false positives goes beyond information security. In e-commerce, false positives, or false rejections, represent $443 billion in lost revenue per year, far exceeding the actual cost of credit card fraud. The incorrect blocking of legitimate transactions can potentially cost businesses up to $386 billion a year.

What's more, false positives damage a company's reputation. They lead to bad customer experiences, with legitimate customers turned away or their transactions blocked. This can drive customers away: 38% of consumers say they would switch retailers after a false refusal.

Mitigating false positives: the cybersecurity team’s approach

False positives in security alerts are a major challenge for security analysts.

The steps involved in handling false positives (FPs) are explained in detail below:

- Integration with threat intelligence: feed threat intelligence into SIEM (Security Information and Event Management) solutions, which collect logs and data from various sources across the network.

This integration enhances SIEM capabilities by providing contextual information on potential threats, enabling analysts to understand attackers’ tactics, techniques and procedures (TTPs).

This reduces false positives and helps analysts focus on critical incidents.

- User-driven scoring approach: prioritize alerts by severity and relevance, assigning scores so that analysts focus on the most important threats and the noise from false positives is reduced.

- Validation and whitelisting: validate indicators of compromise (IoCs) and compare them against a whitelist. With over 43 million whitelist policies, entities known to be benign can be filtered out before they turn into false positives. Analysts can also check the whitelist status of any IP address, hash or domain.
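The three measures can be chained into one triage step. The sketch below is a minimal illustration under stated assumptions: the `Alert` fields, the tiny `ALLOWLIST` and `THREAT_INTEL` dictionaries, and the scoring formula (severity times asset criticality, plus a fixed bonus for a threat-intelligence match) are all hypothetical stand-ins for a real SIEM, feed and scoring policy. The IP addresses come from documentation-reserved ranges.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    indicator: str          # IP address, file hash or domain from the alert
    severity: int           # 1 (low) to 5 (critical), set by the detection rule
    asset_criticality: int  # 1 to 5, importance of the affected asset
    tags: list = field(default_factory=list)


# Hypothetical stand-ins for a real whitelist and threat-intelligence feed.
ALLOWLIST = {"192.0.2.10", "updates.example.com"}
THREAT_INTEL = {"203.0.113.7": "known C2 server"}
INTEL_BONUS = 10  # hypothetical weight for an intel match


def triage(alerts, allowlist=ALLOWLIST, intel=THREAT_INTEL):
    """Drop whitelisted indicators, enrich the rest, and sort by score."""
    queue = []
    for alert in alerts:
        # Validation and whitelisting: skip entities known to be benign.
        if alert.indicator in allowlist:
            continue
        # Threat-intelligence enrichment: attach context (mutates alert.tags).
        context = intel.get(alert.indicator)
        if context:
            alert.tags.append(context)
        # Scoring: severity weighted by asset criticality, boosted by intel.
        score = alert.severity * alert.asset_criticality + (INTEL_BONUS if context else 0)
        queue.append((score, alert))
    # Highest score first, so analysts see the most important threats first.
    queue.sort(key=lambda item: item[0], reverse=True)
    return queue


queue = triage([
    Alert("192.0.2.10", severity=3, asset_criticality=3),   # whitelisted, dropped
    Alert("198.51.100.4", severity=2, asset_criticality=2),  # score 4
    Alert("203.0.113.7", severity=2, asset_criticality=4),   # 8 + 10 = 18
])
```

Whitelisting runs first so benign entities never consume scoring or analyst time; the intel bonus lets a low-severity alert on a known malicious indicator outrank a noisier but unconfirmed one.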

Implementing these measures effectively reduces the occurrence of false positives, enabling security teams to operate more efficiently and focus on the real threats.